Why and how we went off the beaten track to showcase a concrete use of AI to support the creative process.

The why

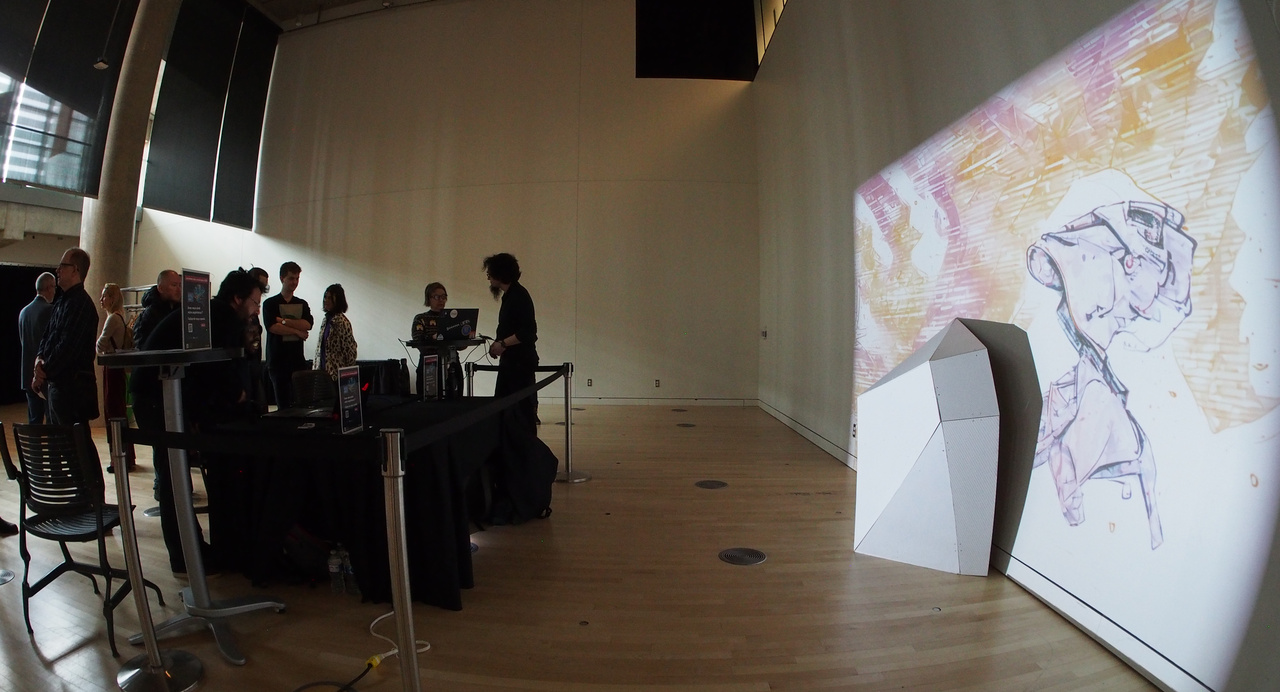

As part of an event dedicated to AI, held on March 23 and 24, 2024 at the BAnQ, we were invited to design and present a workshop introducing deep learning’s ability to support the creative process. Using AI to create content is admittedly one of its most visible applications today, and also one of the most contested: copyright, the creativity of the models themselves and the replacement of creative people by algorithms are just some of the many issues under debate, including by prominent artists.

Our aim with this workshop was to encourage discussion with participants on every topic related to generative models. To push our own thinking on the usefulness of such tools, we also wanted to move away from the obvious uses, such as those offered by the OpenAIs of this world, and go further than simply generating an image displayed on a screen. It was therefore natural for us to look for a way to apply diffusion models to digital art through a dedicated digital installation.

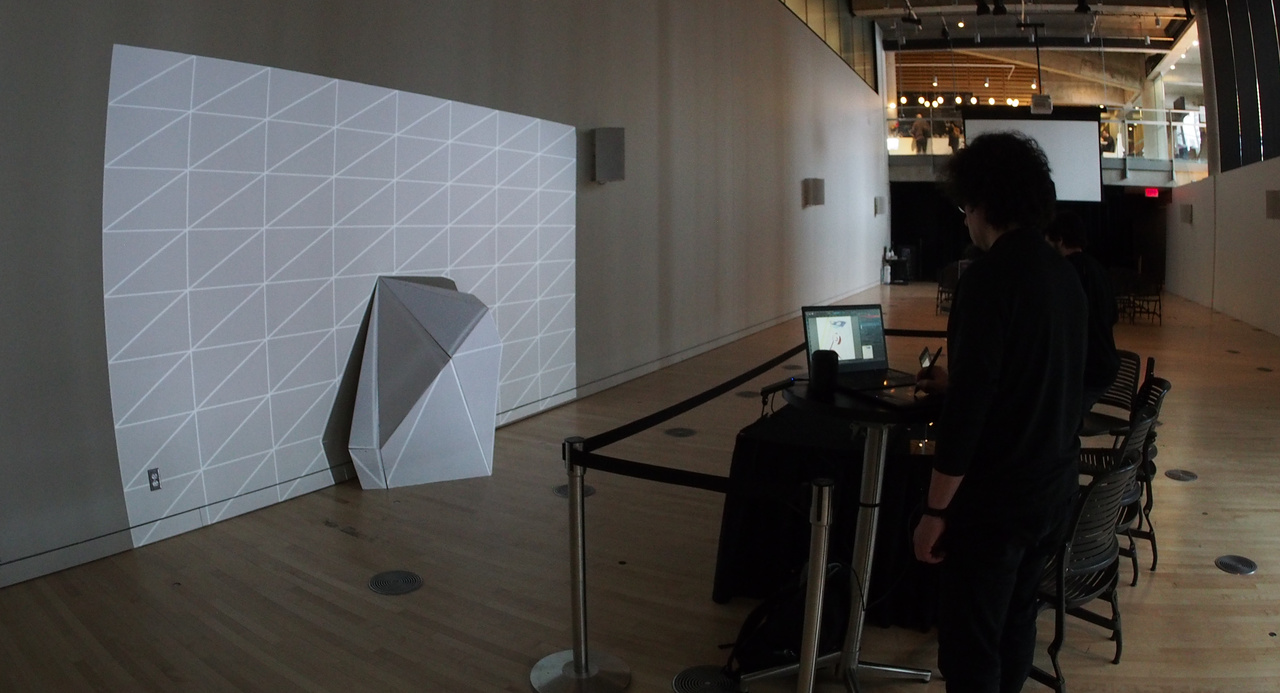

Our proposal therefore evolved from a workshop made up of a few creative stations, where participants could try using AI to assist their 2D graphic exploration, into a digital installation including architectural projection onto a purpose-built sculpture. The idea was to show that AI can be a real asset to the creative process when applied to an unusual medium.

The how: physical construction

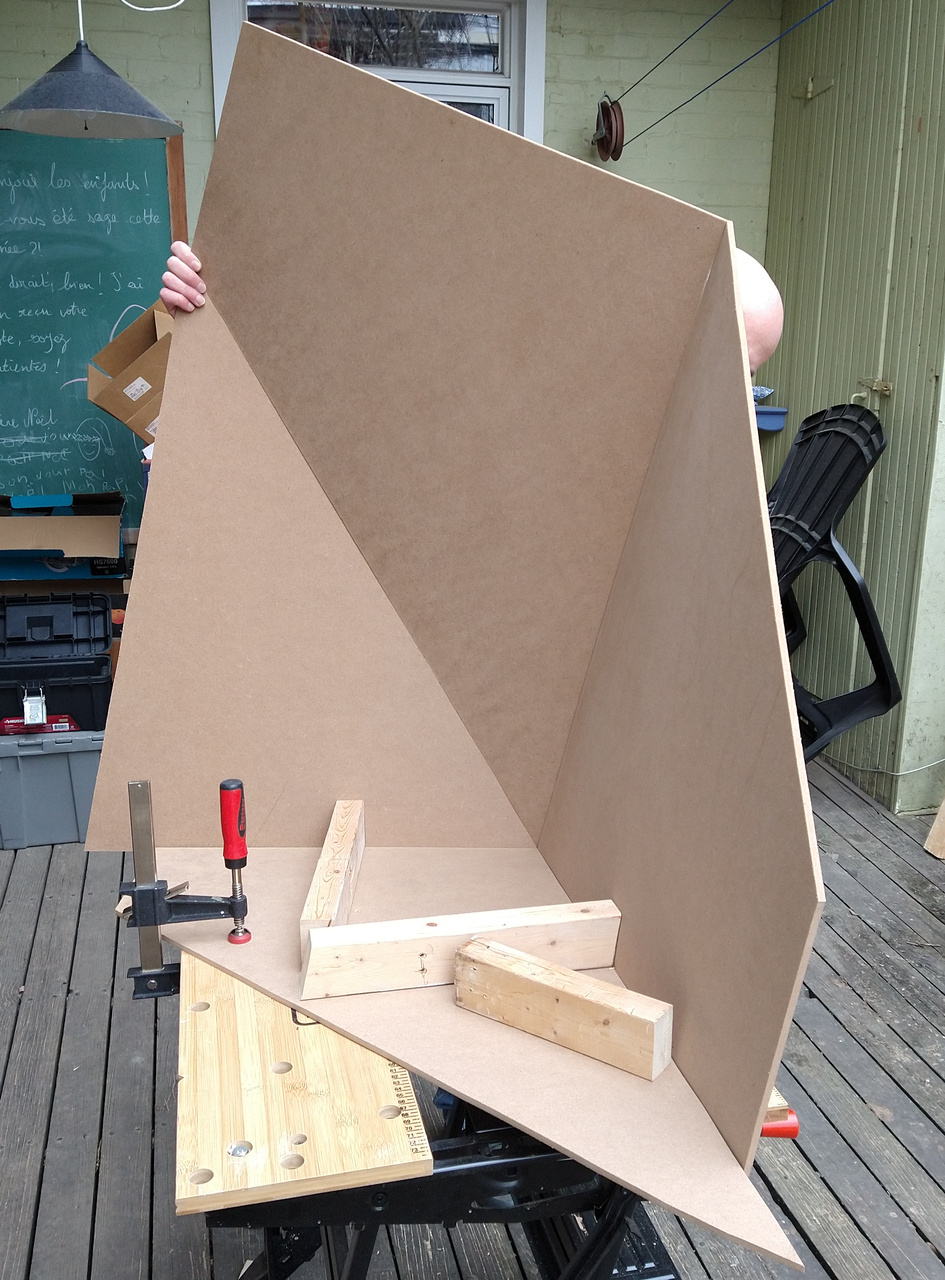

Let’s start with the sculpture. We wanted a projection surface neutral enough not to influence participants too much, so that they could explore freely. We therefore settled on a patatoid (an irregular, potato-like shape) with a reduced number of polygons to make construction easier. To widen the range of possibilities, we placed this object against a wall with one of its faces flat, which let us extend the content beyond the sculpture onto the surrounding wall. The object was modeled in Blender and assembled by hand (or nearly so) from MDF sheets and hinges, all painted white, of course.

When it came to hardware, we chose to keep things simple for two reasons: the sculpture itself already required a great deal of logistical effort to transport, and building it by hand did not allow great precision in our measurements. Using a single video projector greatly simplified projection calibration. For the design workstations, we used two laptops with graphics tablets. To host the diffusion models, we relied on our own desktop computers equipped with suitable graphics cards, which were accessible via a VPN.

The how: software construction

On the software side, we used the following tools:

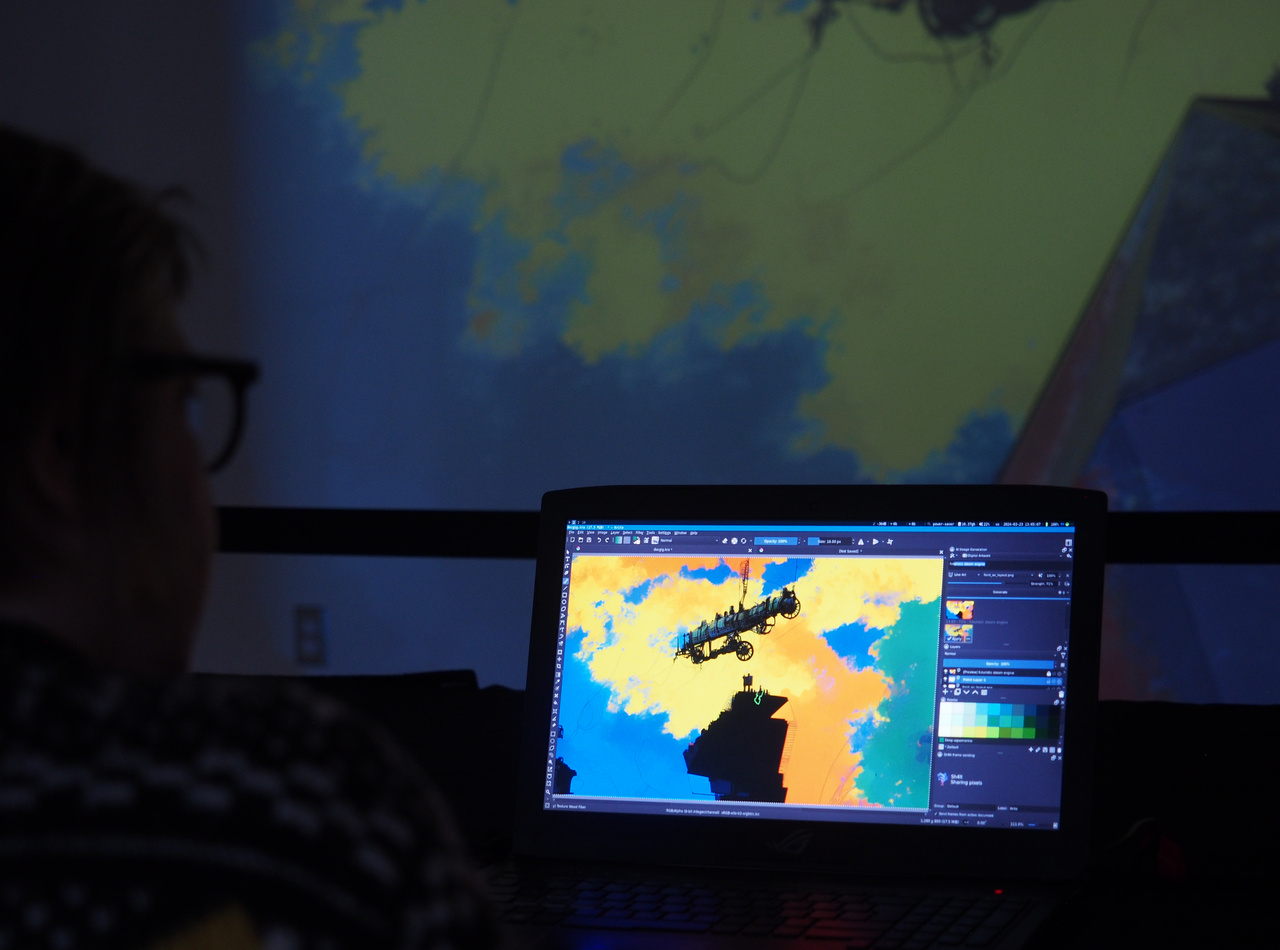

- Krita for drawing

- ComfyUI to run the diffusion models

- Krita-AI to connect Krita to ComfyUI

- Splash for video mapping

What’s missing from this list is a way for Krita and Splash to communicate, so that the images created in Krita (with the help of AI) can be sent to Splash. We could have used OBS Studio to capture part of the display and send it to Splash over NDI, but that would not have been very robust in practice. We therefore developed a Krita add-on that shares the edited image with other processes on the same computer. It relies on Sh4lt, a library of our own design built for exactly this kind of communication, and we added Sh4lt support to Splash for the occasion.
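To make the idea of handing images from one process to another more concrete, here is a minimal sketch using Python’s standard `multiprocessing.shared_memory` module. It is a generic shared-memory illustration, not Sh4lt’s API or frame format: the header layout (width and height as two little-endian 32-bit integers, followed by RGBA pixels) and the helper names `publish_frame` and `read_frame` are hypothetical, chosen only to show a writer (such as a drawing application) exposing a named buffer that a reader (such as a video-mapping tool) attaches to by name.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical frame layout (NOT Sh4lt's actual format): a small header holding
# width and height as little-endian uint32, followed by tightly packed RGBA pixels.
HEADER = struct.Struct("<II")


def publish_frame(width: int, height: int, rgba: bytes) -> shared_memory.SharedMemory:
    """Writer side (e.g. a drawing application): copy one frame into a new named segment."""
    if len(rgba) != width * height * 4:
        raise ValueError("pixel buffer does not match width * height * 4")
    shm = shared_memory.SharedMemory(create=True, size=HEADER.size + len(rgba))
    HEADER.pack_into(shm.buf, 0, width, height)
    shm.buf[HEADER.size:HEADER.size + len(rgba)] = rgba
    return shm  # the writer keeps ownership and must close() and unlink() it later


def read_frame(segment_name: str) -> tuple[int, int, bytes]:
    """Reader side (e.g. a video-mapping process): attach by name and copy the frame out."""
    shm = shared_memory.SharedMemory(name=segment_name)
    try:
        width, height = HEADER.unpack_from(shm.buf, 0)
        size = width * height * 4
        pixels = bytes(shm.buf[HEADER.size:HEADER.size + size])
    finally:
        shm.close()  # detach only; the writer owns the segment's lifetime
    return width, height, pixels


if __name__ == "__main__":
    frame = bytes([255, 0, 0, 255]) * (2 * 2)  # a 2x2 opaque red image
    shm = publish_frame(2, 2, frame)
    try:
        # In a real setup this call would run in another process that only knows shm.name.
        w, h, pixels = read_frame(shm.name)
        print(w, h, pixels == frame)  # 2 2 True
    finally:
        shm.close()
        shm.unlink()
```

Both ends run in one script here for brevity. A real inter-process setup would also need some form of synchronization so that the reader never copies a half-written frame, which this sketch deliberately leaves out.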

To transfer images between the two laptops, we used the SRT protocol through GStreamer, to which we also added Sh4lt support. Together, these pieces let us link all of the software so that two participants could collaborate on the content projected onto the installation.
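The link between the two laptops can be pictured as two GStreamer pipelines, one per machine. The Python sketch below only assembles `gst-launch-1.0` pipeline descriptions. The H.264 over MPEG-TS encoding chain, the port 7001 and the `videotestsrc` and `autovideosink` placeholders are all assumptions: the text does not say which codec was used, nor does it name the GStreamer elements that carry Sh4lt frames, which would take the place of the placeholders in the actual setup.

```python
import shlex

SRT_PORT = 7001  # hypothetical port choice


def sender_pipeline(port: int = SRT_PORT, source: str = "videotestsrc is-live=true") -> str:
    """Sender laptop: encode frames to H.264, wrap them in MPEG-TS and serve them over SRT.

    `source` is a placeholder; in the installation, a Sh4lt-fed source would go here.
    An empty host in the srtsink URI makes this side wait for incoming connections.
    """
    return (
        f"{source} ! videoconvert ! x264enc tune=zerolatency ! "
        f"mpegtsmux ! srtsink uri=srt://:{port}"
    )


def receiver_pipeline(sender_host: str, port: int = SRT_PORT, sink: str = "autovideosink") -> str:
    """Receiver laptop: connect to the sender, demux, decode and hand frames to `sink`.

    `sink` is a placeholder; in the installation, a Sh4lt-writing sink would go here.
    """
    return (
        f"srtsrc uri=srt://{sender_host}:{port} ! tsdemux ! h264parse ! "
        f"avdec_h264 ! videoconvert ! {sink}"
    )


def gst_launch_command(pipeline: str) -> list[str]:
    """Split a pipeline description into an argv list for gst-launch-1.0."""
    return ["gst-launch-1.0", *shlex.split(pipeline)]


if __name__ == "__main__":
    print(" ".join(gst_launch_command(sender_pipeline())))
    print(" ".join(gst_launch_command(receiver_pipeline("192.168.1.20"))))  # hypothetical address
```

Passing one of these lists to `subprocess.run` would start the corresponding side on a machine where GStreamer and its SRT plugin are installed. A zero-latency encoder setting is a natural choice here, since participants would expect their strokes to show up on the sculpture with as little delay as possible.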

Thoughts and possibilities

In terms of our objectives, the workshop format was a success. Participants asked many questions about the uses of generative AI, its technical implementation, its implications for the cultural ecosystem, and ethical and intellectual property considerations.

As for our own hopes for the workshop, we observed that participants made relatively limited use of the projection surface as a creative framework, and that collaboration between users of the two stations was almost non-existent. These are new and innovative approaches, even for us, so it is not surprising that participants with no particular knowledge of these tools did not readily take them up. We nonetheless intend to keep developing them in future projects.

This project convinced us of generative AI’s potential to assist exploration phases, particularly with unusual display devices. Architectural projection onto a patatoid is admittedly not common practice, so we enjoyed experimenting with ControlNet through ComfyUI to constrain generation to the geometry of the sculpture. Much remains to be explored, however.

Finally, this workshop also let us experiment with a reversed process for architectural projection: we started from a 3D model and built the physical structure from it, rather than the other way around. Because of a limited budget, we made everything by hand, but in the future we plan to use techniques such as projecting plans directly onto MDF sheets to ease the transfer of dimensions (another application of video mapping!), or even laser cutting when the pieces are not too large.